Self Hosting With an Enterprise-Level Approach

Put your heart into doing the little things right.

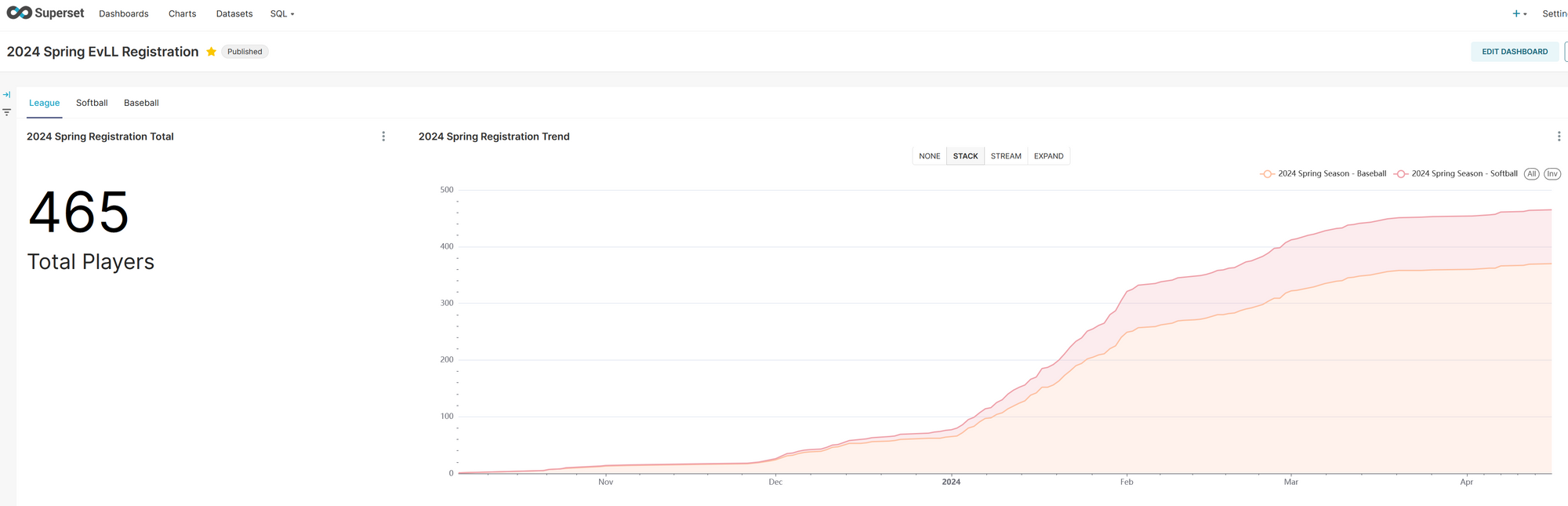

As someone who thrives on tech challenges, I embarked last year on a meaningful project: helping the board of directors for my local little league make better, data-driven decisions about participation. You might wonder why a youth sports league needs data analysis, but in today’s world, data is the backbone of smart decision-making, even in community activities. Whether it’s understanding which neighborhoods have the most sign-ups or tracking participation by age group, this data helps guide important choices. And since data is as valuable here as it is in any enterprise, I knew I had to treat the project with the same care and professionalism I’d bring to an enterprise system.

From a Virtualization Playground to a New Superset VM

I’ve always viewed my home lab as a serious environment for experimentation. Sure, I test new things for fun, but the approach is always professional; after all, what’s the point of learning if you’re not doing it right? My Proxmox server has become a foundational part of that lab, providing a reliable platform for virtual machines and containers that mirrors enterprise-level infrastructure.

This time, however, I had a very real and community-driven need: the little league’s board was swimming in data but didn’t have the right tools to analyze it. They were using spreadsheets, which were functional but couldn’t provide deep insights. I knew the answer lay in Apache Superset, a powerful open-source data visualization tool.

Setting up a Debian VM on Proxmox was the first step. Using the same care I would apply to an enterprise system, I made sure the virtual machine was efficient, stable, and configured for long-term use. The focus wasn’t just on getting something running; I was building a production-grade environment. Superset needed to be easy for the board to use and able to scale as their data grew. I followed best practices for security settings, resource allocation, and network configuration. After all, just because it’s self-hosted doesn’t mean it should be treated casually.

With a little data and some dashboards, our Superset site was showing value.

Data Safety: No Shortcuts on Backup Strategy

With Superset up and running, I turned my attention to a crucial aspect of any data project: backup and disaster recovery. Whether you’re running a home lab or managing an enterprise system, data loss can be catastrophic. In the little league’s case, the PostgreSQL database behind Superset contained valuable participation data, the kind of insight that shapes decisions about everything from equipment to league schedules.

I knew I needed to approach backups with the same seriousness as I would in any business setting. That meant no shortcuts, no “good enough” solutions. The database needed to be backed up regularly, securely, and in a way that was easy to restore.

Here’s where my backup options got real:

- Proxmox Snapshots: This was my first thought. Proxmox makes it easy to take VM snapshots, which capture the entire system state. But, as in enterprise environments, I knew snapshots weren’t the right tool here: they are heavy and resource-intensive, and they don’t offer granular, database-level backups.

- pg_dump: PostgreSQL’s logical backup tool was a more targeted solution. It backs up just the database, so the backups are smaller, faster to run, and easier to restore. This was exactly what I needed for regular, scheduled backups of the participation data.

- Cloud storage: Third-party services like AWS S3 or Google Cloud Storage were tempting. But I’m a firm believer in self-reliance. Why hand over control of my backups when I had the hardware to handle it myself?
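
To make the pg_dump option concrete, here is a minimal Python sketch that builds the pg_dump and matching pg_restore command lines for a single database. It assumes PostgreSQL’s custom archive format (`--format=custom`), which is compressed and readable by pg_restore; the host, the `postgres` user, the `superset` database name, and the file-naming scheme are placeholders for illustration, not details of my setup.

```python
import shlex
from datetime import datetime, timezone
from typing import List, Optional


def dump_command(dbname: str, outfile: str, host: str = "localhost",
                 user: str = "postgres") -> List[str]:
    """Build a pg_dump command that writes one database to a custom-format archive."""
    return [
        "pg_dump",
        f"--host={host}",
        f"--username={user}",
        "--format=custom",  # compressed archive that pg_restore can read
        f"--file={outfile}",
        dbname,
    ]


def restore_command(dbname: str, archive: str, host: str = "localhost",
                    user: str = "postgres") -> List[str]:
    """Build a pg_restore command that recreates the database's objects from an archive."""
    return [
        "pg_restore",
        f"--host={host}",
        f"--username={user}",
        f"--dbname={dbname}",
        "--clean",      # drop existing objects before recreating them
        "--if-exists",  # with --clean, skip errors for objects that don't exist yet
        archive,
    ]


def archive_name(dbname: str, now: Optional[datetime] = None) -> str:
    """Hypothetical naming scheme: <dbname>-<UTC timestamp>.dump."""
    now = now or datetime.now(timezone.utc)
    return f"{dbname}-{now:%Y%m%dT%H%M%SZ}.dump"


if __name__ == "__main__":
    name = archive_name("superset", datetime(2024, 1, 1, tzinfo=timezone.utc))
    # Print rather than run; subprocess.run(cmd, check=True) would execute it.
    print(shlex.join(dump_command("superset", name)))
    print(shlex.join(restore_command("superset", name)))
```

pg_dump and pg_restore can read the password from the `PGPASSWORD` environment variable or a `~/.pgpass` file, so no secret has to appear on the command line. Building argument lists instead of shell strings also sidesteps quoting problems when the commands are handed to `subprocess.run`.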

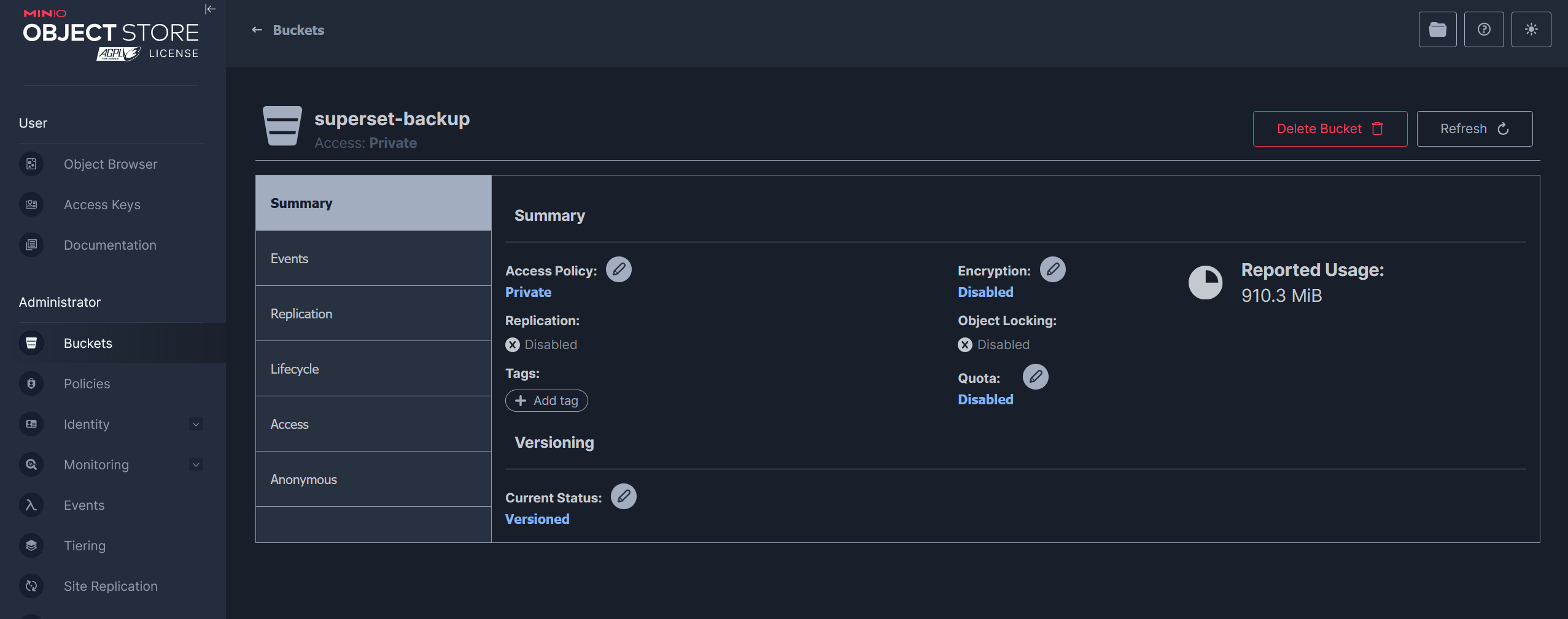

After some research, I turned to MinIO, an open-source, S3-compatible object storage platform that I run on my NAS, separate from my Proxmox server. In an enterprise, you wouldn’t store critical backups on the same machine as the production system; that’s asking for trouble. So why do it in my home lab? Just as in a professional setup, keeping backups on separate hardware adds resilience: if something goes wrong on the Proxmox server, my backups remain safe on another machine.

MinIO’s enterprise-grade capabilities, like S3 API compatibility and scalability, made it the perfect solution. I could configure it just like AWS S3, but without the recurring costs or external dependencies. And because it’s running on my NAS, I’m not tied to a third-party provider—I maintain complete control over my data. In terms of security and performance, MinIO on my NAS gives me the same confidence I’d expect from a cloud provider, but with the added benefit of local control.
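
Because MinIO speaks the S3 API, ordinary S3 tooling works once it is pointed at a different endpoint. The sketch below uses the boto3 library purely for illustration (my n8n workflow doesn’t use it), and assumes a NAS reachable at a hypothetical `http://nas.local:9000` (9000 is MinIO’s default API port) with credentials in hypothetical `MINIO_ACCESS_KEY` and `MINIO_SECRET_KEY` environment variables.

```python
import os


def minio_client_kwargs(endpoint: str) -> dict:
    """Keyword arguments for boto3.client("s3", ...) aimed at a MinIO server."""
    return {
        "endpoint_url": endpoint,  # the main difference from talking to AWS S3
        # Hypothetical variable names; use whatever your secrets setup provides.
        "aws_access_key_id": os.environ.get("MINIO_ACCESS_KEY", ""),
        "aws_secret_access_key": os.environ.get("MINIO_SECRET_KEY", ""),
        "region_name": "us-east-1",  # MinIO's default when no region is configured
    }


def upload_backup(path: str, bucket: str, endpoint: str) -> str:
    """Upload one file to the bucket and return the object key it was stored under."""
    import boto3  # imported lazily so the helper above works without boto3 installed
    from botocore.config import Config

    client = boto3.client(
        "s3",
        config=Config(s3={"addressing_style": "path"}),  # bucket in the URL path
        **minio_client_kwargs(endpoint),
    )
    key = os.path.basename(path)  # store the archive under its own file name
    client.upload_file(path, bucket, key)
    return key
```

Calling `upload_backup("superset-20240101T000000Z.dump", "superset-backups", "http://nas.local:9000")` would store the archive in a hypothetical `superset-backups` bucket. Path-style addressing keeps the bucket name in the URL path rather than the hostname, so the NAS doesn’t need wildcard DNS entries for each bucket.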

The importance of redundancy and scalability in data storage can’t be overstated, whether you’re dealing with millions of transactions in a business or hundreds of players in a youth league. MinIO gives me room to scale as the little league’s data grows, so storage never becomes a bottleneck.

Automating with n8n: Bringing Workflow Automation to Backups

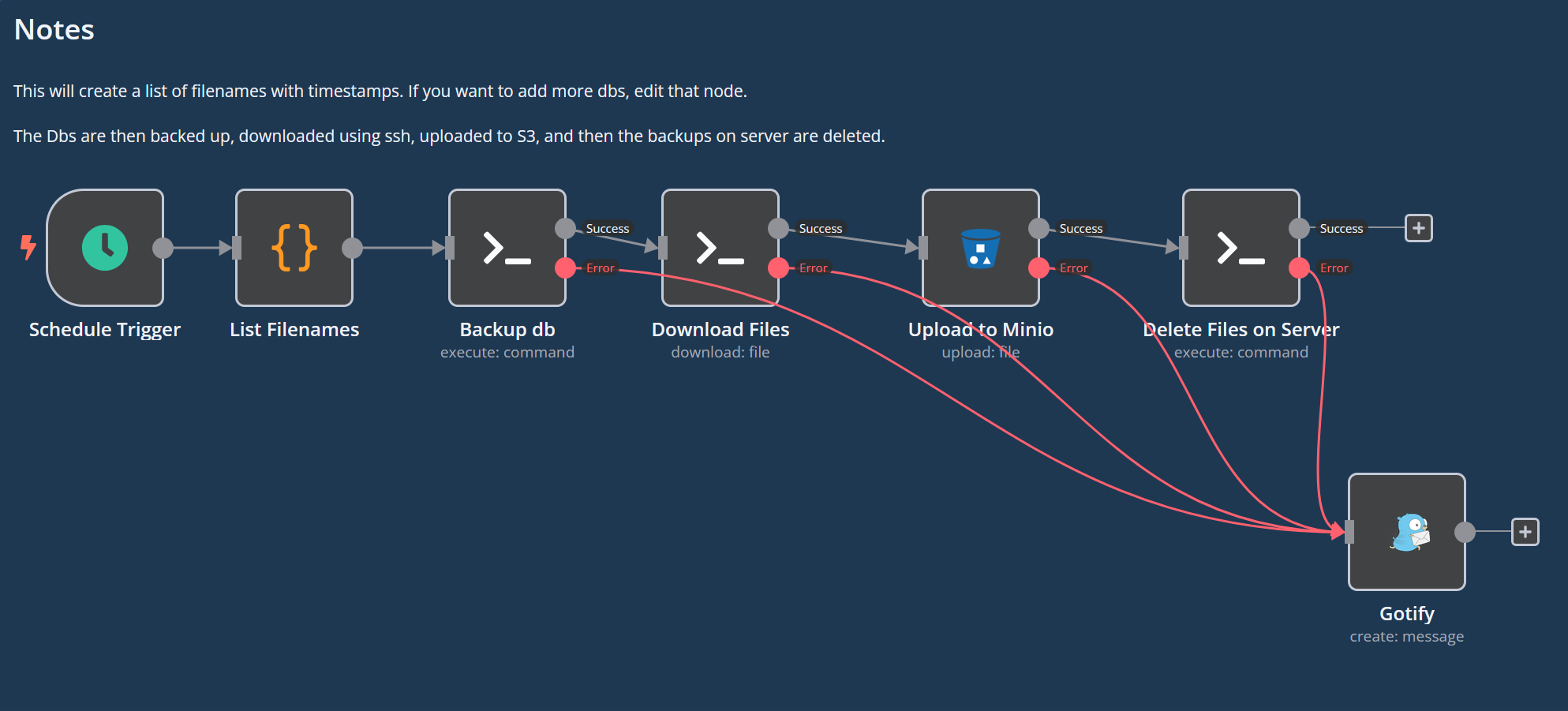

Once I had MinIO set up, I needed a reliable, automated process to ensure that backups were happening regularly and without fail. In enterprise environments, automation is key to reducing human error and improving reliability. My home lab follows the same principles.

For this, I used n8n, a powerful open-source workflow automation tool that I also host on my home infrastructure. n8n is like the glue that holds my self-hosted services together, providing a visual interface to build workflows that automate repetitive tasks. In this case, that meant orchestrating database backups.

Here’s how I set up the workflow:

- Scheduled trigger: Every hour, n8n kicks off the workflow to create a fresh database backup. The trigger plays the same role as the job schedulers businesses use to run recurring processes.

- pg_dump execution: The workflow uses pg_dump to take a logical backup of the PostgreSQL database that powers Superset, along with my other databases. As with enterprise databases, consistency and data integrity are critical. pg_dump helps here because it produces a consistent backup even while the database is in use, and I configured the dump to produce a clean, reliable backup.

- Upload to MinIO: n8n then reads the backup files into the workflow and uploads them to my MinIO bucket on the NAS through the S3-compatible API. Keeping the backups on hardware separate from the primary infrastructure gives me a resilient, off-host backup system, a best practice in enterprise IT.

- Cleanup: Leftover backup files pile up if they aren’t managed, so once the upload succeeds, the workflow deletes the local copies to keep the VM’s disk from filling up.

- Notification: Managing signal-to-noise matters, so n8n notifies my existing Gotify service when a run fails rather than every time a backup completes. The result is that I’m only alerted when there’s action to take, much like the alerting you’d expect from enterprise systems. I tested the workflow and its failure alerts extensively, so I’m confident it runs smoothly on schedule.
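
The n8n workflow is built visually, but its logic is easy to express in code. What follows is a rough Python equivalent of one run, not an export of my workflow: it dumps each database, uploads the archive, deletes the local file only after a successful upload, and sends a Gotify message only when something fails. The dump and upload steps are passed in as functions so they can be real implementations or test stubs. Gotify’s `POST /message` endpoint takes a JSON body with `title`, `message`, and `priority`, authenticated by an application token; the URL, token, and priority value here are placeholders.

```python
import json
import os
import urllib.request
from typing import Callable, List


def gotify_request(base_url: str, token: str, title: str, message: str,
                   priority: int = 8) -> urllib.request.Request:
    """Build (but don't send) a request to Gotify's POST /message endpoint."""
    body = json.dumps({"title": title, "message": message,
                       "priority": priority}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/message",
        data=body,
        headers={"Content-Type": "application/json",
                 "X-Gotify-Key": token},  # Gotify application token
        method="POST",
    )


def run_backup(databases: List[str],
               dump: Callable[[str], str],
               upload: Callable[[str], None],
               notify: Callable[[str], None]) -> List[str]:
    """One scheduled run: dump, upload, then clean up; alert only on failure.

    Returns the names of databases whose backup failed (empty when all succeed).
    """
    failed = []
    for db in databases:
        try:
            path = dump(db)   # e.g. run pg_dump and return the archive path
            upload(path)      # e.g. push the archive to the MinIO bucket
            os.remove(path)   # reached only after a successful upload
        except Exception as exc:  # record and continue with the next database
            failed.append(db)
            notify(f"Backup of {db} failed: {exc}")
    # No notification on success: silence means the run went fine.
    return failed
```

To send alerts for real, `notify` could be `lambda msg: urllib.request.urlopen(gotify_request(GOTIFY_URL, TOKEN, "Backup failed", msg))`, with `GOTIFY_URL` and `TOKEN` as placeholders; the hourly schedule stays with n8n’s schedule trigger (the cron equivalent is `0 * * * *`). One design choice worth noting: a failed upload leaves the local archive in place, so nothing is deleted until a copy is safely on the NAS.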

Why Treating Self-Hosting Like an Enterprise System Matters

You might wonder why I go to such lengths in a home lab setup. The answer is simple: self-hosted environments deserve the same level of professionalism as enterprise systems. Just because it’s running in my home doesn’t mean it shouldn’t be resilient, secure, and automated. In fact, that’s precisely why I enjoy this work—there’s a satisfaction in knowing that I’ve built a production-grade solution on my own terms, without cutting corners.

Whether it’s the virtualization power of Proxmox, the data visualization capabilities of Apache Superset, or the resilient backup pipeline built on MinIO and n8n, every piece of this setup is designed with longevity and reliability in mind. I’m not just throwing tools together to see what sticks; I’m following the same best practices I’d use in any professional environment. As a result, the little league board now has the insights it needs, and I’m confident the entire system is as solid as a professional deployment.

The key takeaway? No matter the scale, whether it’s a local sports league or a major enterprise, best practices apply everywhere. When you treat self-hosting with the same level of care as an enterprise system, you ensure that your infrastructure is built to last.